Brand safety is all too often limited to advertiser concerns about appearing alongside offensive content. But Product Marketing Lead Gavin Dunaway argues publishers also have brands to protect from harmful advertising.

Around Halloween 2019, I was catching up on politics on a major news publisher’s site when an image in a 970x90 banner ad stopped me dead in my scrolling. I had a flashback to the anatomically accurate depictions of female genitalia shown in junior high sex education class. Except this genitalia appeared to be sitting on top of a person’s neck.

This mask was graphic. But I could not look away or resist the urge to click. Yes, I took the risk that the same ad might follow me across the web for weeks. (Beware: the retargeting curse…)

“Full-head clam shell mask” blared the landing page title. Seen from the side, it looked like a harmless seashell someone was wearing on their head. Front and center, however? Female anatomy, and definitely an intentional resemblance.

At the risk of being called a prude, I can’t imagine most people would enjoy being subjected to a “clam shell mask” ad while perusing the news. And I know publisher revenue folks would be horrified if this image appeared in front of their audiences. I can just imagine the screams from the editorial team.

Out of publisher control

Brand safety conversations too often focus on advertiser concerns, overlooking publishers’ efforts to shield consumers from offensive or harmful creative. As this ad proved, even a premium publisher can end up serving a tasteless ad. Yet my curiosity was piqued: how did this explicit mask end up in front of me? If that was targeted advertising, what on earth had I done online to make an advertiser think I’d want to purchase that?

The ad had been served by a well-known retargeting firm, though I’d never visited the site in question. A little investigation revealed that the same site also sold a variety of guitar equipment, a category for which I’m very much the target market. So I was most likely part of a lookalike pool. However, the advertiser or its DSP had been careless in selecting relevant creative.

Which brings up the conundrum—did the publisher have any control over the creative in that situation? Thanks to real-time bidding, no one on the revenue team had any clue that specific creative was going to give me nightmares.

Sure, advertisers can use the IAB Content Taxonomy to pre-declare ad content. But if you’re dynamically serving a huge amount of creative, clam shell head masks included, are you really going to spell out everything that might offend the end user?

Publishers and supply-side platforms are forced to place a lot of faith in their upstream partners (DSPs and buying platforms). They assume those partners are looking out for their best interests and complying with their acceptable creative policies. All too often, that faith is unwarranted, and unwanted creative sails through the programmatic pipes. Publisher reputation and user experience are damaged, but the ultimate victim is the end user.

Brand safety goes both ways

I thought about the clam shell mask when I was reading the new brand safety report from the Global Alliance for Responsible Media (GARM), a World Federation of Advertisers initiative. Analyzing content across seven major social media platforms including Facebook, Instagram, Twitter, and YouTube, GARM discovered that more than 80% of the 3.3 billion removed posts fell into the categories of Spam, Adult & Explicit Content, and Hate Speech & Acts of Aggression.

That’s certainly alarming, but it’s only one side of the coin. When the ad industry talks about brand safety, it’s accepted that we’re referring to advertiser concerns about offensive web content.

However, publishers are brands too. And keeping publisher brands safe from offensive advertising also protects consumers. This is particularly important when publishers monetize through the open programmatic marketplace, where they have limited control over the kinds of creative that show up on their sites (e.g., disturbing clam shell masks!).

Categorically bad ads

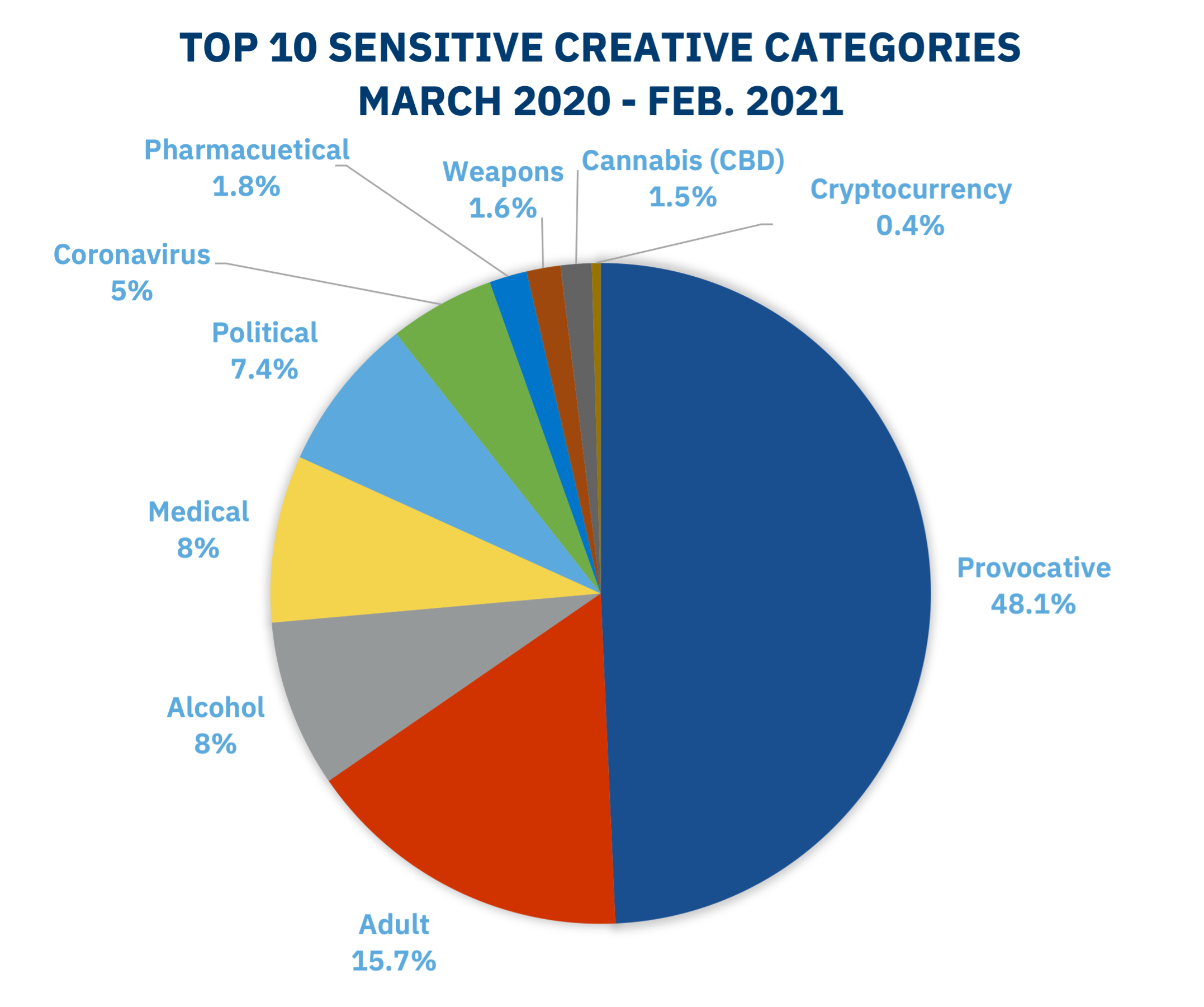

“The Year in Sensitive Creative,” a report from The Media Trust, analyzed objectionable ads between March 2020 and February 2021. It reveals that nearly two-thirds of the more than 1 million creatives flagged were Adult (nudity, sex toys, adult entertainment) or Provocative (sexually suggestive content, profane language) ads. Other high-risk categories include Alcohol and Political (with a big spike in inflammatory ads in October 2020), as well as Weapons, which has been on a disturbing rise since the November 2020 elections.

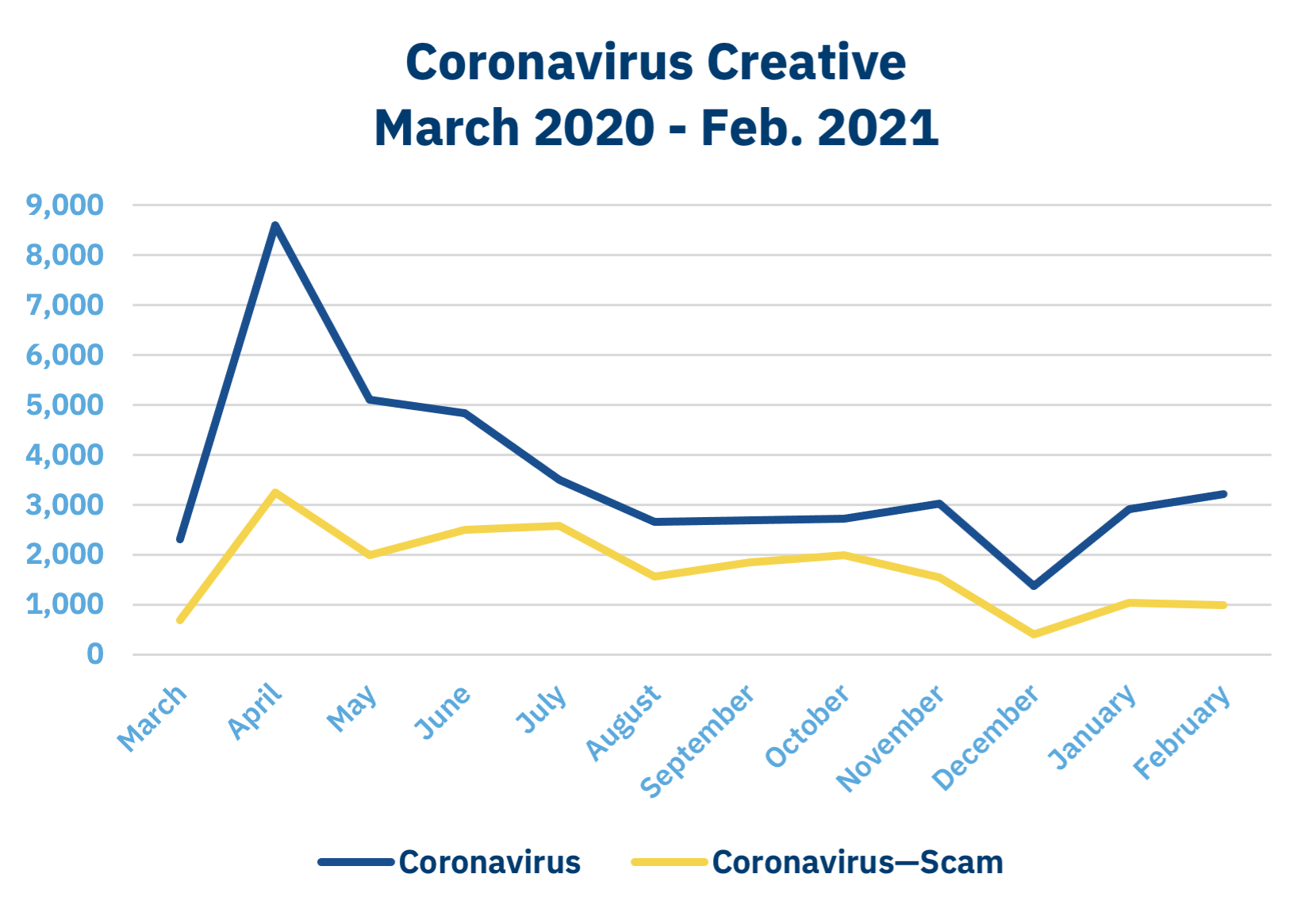

Bad creative goes beyond serving someone an ad with nudity or inflammatory subject matter. According to the report, nearly half of the ads related to coronavirus over this period were outright scams. These ranged from price-gouging on PPE to false or unverifiable claims about masks, air filters, and sanitizers. That isn’t just a risk to user experience. It’s also a safety concern for consumers being preyed upon by bad actors. On top of that, publishers face legal liability in heavily regulated categories such as Medical or Pharmaceutical.

Publishers have long been the last line of defense in protecting consumers from bad ads, particularly when it comes to malware and malvertising. DSPs and buying partners have shrugged off their responsibility for keeping consumers safe from objectionable ads and outright scams, all while watching the revenue roll in.

Embracing responsibility

The good news is that many upstream partners like DSPs are starting to recognize the importance of complying with publisher creative policies, as well as their own responsibility in protecting consumers. Buy-side platforms increasingly see a high-quality ad experience as good for business, so they’re using AI-powered categorization systems to identify potentially offensive creative at scale.

The number of creative violations detected in the second half of the period analyzed in “The Year in Sensitive Creative” was markedly lower than in the first half. In particular, the volume of Adult creative has fallen 73% since November 2020. This suggests that advertisers either switched tactics or moved on to less stringent platforms.

But that’s still a problem. Too many unsavory AdTech providers are willing to look the other way on publisher brand safety. Leaving consumers vulnerable to offensive and manipulative ads should be unacceptable, particularly when technology to identify sensitive creative at scale is readily available. It’s time all upstream AdTech companies were held accountable for the creative they let loose in the programmatic ecosystem, such as ads for disturbing full-head clam shell masks.

I’m afraid I can’t find the screenshot I took of that graphic mask… But trust me, you don’t want to see it.