This article was authored by Chris Olson, CEO at The Media Trust.

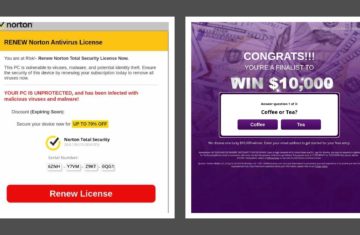

Just in time for Easter, a cybercrime gang has gifted iPhone users with a new malware attack: 500 million sessions and counting. According to reports, the attack exploits an obscure, unpatched vulnerability in Google’s Chrome app, available through the iOS App Store. After serving up malvertising, the attack redirects the user to webpages with various motives: scam contests, malicious payloads, solicitations for credit card details, and so on.

Although it took researchers ten days to discover the new malware, the attack itself should have come as no surprise. Popular consciousness insists that the Apple ecosystem is “more secure” than its competitors, a perception that dates back to the earliest days of personal computing. Yet last November, iPhones were compromised in a similar large-scale attack by PayLeak-3PC, which was followed a few short months later by FourthColor-3PC.

On iOS, PayLeak lures the user with fake ads, then delivers a series of convincing but fraudulent Apple Pay prompts asking for credit card information. What happens next does not require much imagination. But while that particular attack was thwarted, we are left to ask ourselves: what happened to Apple?

The Black Box Approach to Security

Early on in the smartphone wars, Apple distinguished itself from competitors by running an airtight app store. Unlike Google, the company set strict standards (some would argue overly strict) that most applications find difficult to meet even today.

Most people don’t realize that the App Store was not part of Apple’s original plan, and the iPhone was never supposed to run third-party applications. Only after the persistent threat of jailbreaking, unlocking, and competitor sniping did Steve Jobs relent. As the revered Apple CEO once complained,

“We’re trying to do two diametrically opposed things at once—provide an advanced and open platform to developers while at the same time protect iPhone users from viruses, malware, privacy attacks.”

While critics call out Apple for its “closed platform,” there is logic to this approach: opening up technology to outside developers creates vulnerabilities for which users will hold the creator liable. The motive for locking up MacBooks with proprietary screws, soldered components, and software that prevents third-party repairs isn’t just profit: it’s to retain control of the ecosystem.

At the risk of waxing philosophical, we equate security with black boxes. If you want to keep something safe, your first impulse is to wrap it up tightly. That keeps intruders and thieves away. Unfortunately – if someone is ever clever enough to get in – it also keeps them there, safely away from any discerning eye.

Opening the Black Box

Apple learned the hard way that you can’t keep third-party developers out of your product. Doing so compromises the user experience and ensures that less squeamish competitors win every time. The same dynamic explains why third parties now provide 78% of the code executing on enterprise websites.

To keep users safe and avoid culpability for attacks, the answer isn’t to let those applications in and lock them up: the answer is to look inside. The famous Chinese general Sun Tzu once said,

“Know thy self, know thy enemy.”

Twenty-first-century developers would do well to take heed. Only by knowing whose code is executing on your platforms can you “win a thousand battles, and a thousand victories.” Or, in the case of third-party code on the internet, “win millions of battles, and millions of victories.”