Product Marketing Lead Gavin Dunaway dives into strategies for identifying ads with sensitive subject matter, and why an AI-human hybrid approach is ideal.

Certainly an uptick in gambling ads was expected in 2021 as multiple states in the US loosened regulations around gambling. But what do you think the odds were on the 450% increase detected by The Media Trust since the beginning of 2021?

Some publishers may not be fazed by a huge influx of gambling ads: more money in the pot, as they say. But others are surely thinking, “Do I want ads for sports betting and casinos all over my pages?” Next to sports content, gambling ads probably fit in just fine, but on content for kids? Whoa, Nelly, that’s not a good idea!

What about marijuana ads, which have increased 6X year over year? Dispensaries are opening up in numerous states in 2021, but marijuana is still illegal at the federal level. Should you treat marijuana ads like alcohol ads? And how do you manage alcohol ads beyond “Don’t serve to minors!”?

And how do you feel about cryptocurrency ads, which have doubled since the beginning of 2021? Do you really want your site or app wading into such a controversial topic?

The amount of potentially objectionable ad content is growing every month, and publishers are struggling to protect user experience and defend their brands.

Publisher Integrity at Stake

While advertiser concerns about content tend to dominate the brand safety debate, publishers need to protect their brands, too, from ad content that’s outright offensive or too sensitive. That goes beyond shutting out Nudity, Adult Content, and Adult Products to building policies around contentious subject matter like Gambling, Marijuana, Cryptocurrency, and even Political.

When monetizing programmatically, publishers have little idea what subject matter the ad in any given unit will cover. That lack of control doesn’t jibe with an excellent user experience, so many publishers are banging on the doors of their ad servers and SSPs with acceptable creative policies in hand.

Even with SSPs and DSPs trumpeting controls over sensitive creative subject matter, The Media Trust finds adult content ads with nudity showing up on major publishers, as well as weapons and other undesirable and offensive creative (not to mention malware and scams).

An Insufficient Approach to Categorization

That’s because efforts to identify sensitive ad content have fallen short across the board. Many ad tech companies over-rely on advertisers’ self-classification in the bidstream, based on the IAB Content Taxonomy. But the Content Taxonomy was designed to help advertisers understand media content so they can develop and execute brand suitability strategies.

It’s a great tool for advertiser brand safety, less so for publisher brand safety. While Content Taxonomy 3.0 includes a group of “Sensitive Topics,” it has no category for cryptocurrency or coronavirus, areas some publishers would like to avoid entirely. There’s no specific marijuana or CBD/Hemp category either, just a massive one called “Illegal Drugs/Tobacco/eCigarettes/Vaping/Alcohol.”

In addition, the Content Taxonomy is open to abuse because it relies on advertisers acting in good faith. It’s hard to believe that someone pushing a controversial product or service will be completely upfront; in fact, we’ve seen casinos try to label their creative “Entertainment.”

AI, With a Human Touch

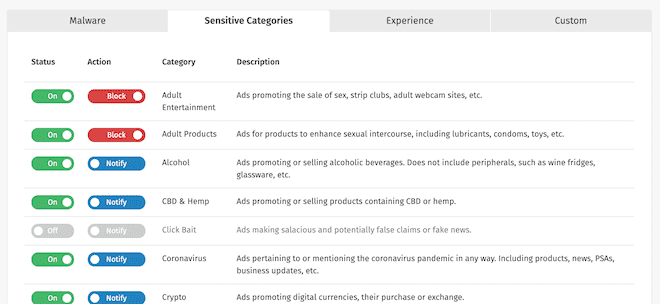

Clearly, some kind of independent verification of ad content is needed, which is where The Media Trust’s Ad Categorization system comes in. With input from major ad platforms and publishers, we built more than 40 sensitive ad categories, including Nudity, Adult Content, Alcohol, Tobacco, Gambling, and many more. We then use AI with object and character recognition technology to analyze creatives against these categories at scale.

Some AI-powered categorization systems stop there, but we recognize that machine learning is still... well, learning. There are interesting quirks in its developmental curve, like labeling violins as weapons (an argument can be made that violins are sonic weaponry, but I digress). When it comes to display, ad creative should be verified by human eyes: AI tackles the issue of scale, and human analysts then ensure accuracy. This AI-human hybrid approach lets us boast an industry-low 2% false positive rate.

Opt for Precision

For DSPs and SSPs, The Media Trust feeds creative through Ad Categorization and identifies potentially problematic ad content. These ad platforms then decide how best to distribute those creatives (e.g., no beer ads on a tween pop culture site) or reject them outright.

Via Media Filter, our real-time ad quality management tool, publishers can block 16 of the most sensitive categories, including Adult Content, Alcohol, Marijuana, Gambling, and Weapons. They can also choose to be notified when flagged creative runs on their site, to better gauge their tolerance for various sensitive categories.

As the digital ad industry undergoes seismic shifts, publishers need to defend the brands their audiences trust. That means comprehensive control over the ad content served on their sites. But this is no moment for over-blocking, or for letting potentially offensive creative slip through because of an insufficient taxonomy. The AI-human hybrid approach to ad categorization offers publishers, and the digital advertising industry at large, precision they can count on.

Take a look inside the reimagined Media Filter and sign up for a free trial.