Product Marketing Lead Gavin Dunaway explains how nuanced policies and responsive ad categorization can help ad platforms navigate even the most delicate of advertising subject matter.

In 2020, Twitter shocked the digital advertising landscape by announcing it would not run political advertising on its platform or its then-owned mobile advertising network MoPub. Then-CEO Jack Dorsey was highly concerned about spreading misinformation at scale, and decided that forgoing the sizable political ad revenue opportunity was in society’s best interest.

But few advertising platforms agree with that stance, or want to leave so much revenue on the table, even if the subject matter is contentious or heavily regulated. The trick to thriving while serving ads with sensitive content is leveraging nuanced tools to filter problematic ad content from the benign, and to filter at scale.

Nuance for the Win

With AdTech platforms ingesting massive volumes of ad creatives daily, there’s no time and not enough team members to manually investigate each tag for content issues. Stalling campaigns means lost revenue. At the same time, letting one objectionable ad campaign slip into the ad pipes and in front of consumers can have monstrous repercussions for business and potentially attract regulators.

That’s why major platforms use The Media Trust’s AI-powered Ad Categorization tool to flag ads with sensitive and regulated content, ripping through more than 6 million creatives a month. These DSPs and SSPs have specialized policies for managing delicate ad content categories, and new contentious products and services are always hitting the market. (Did someone say crypto? See Figure 1.)

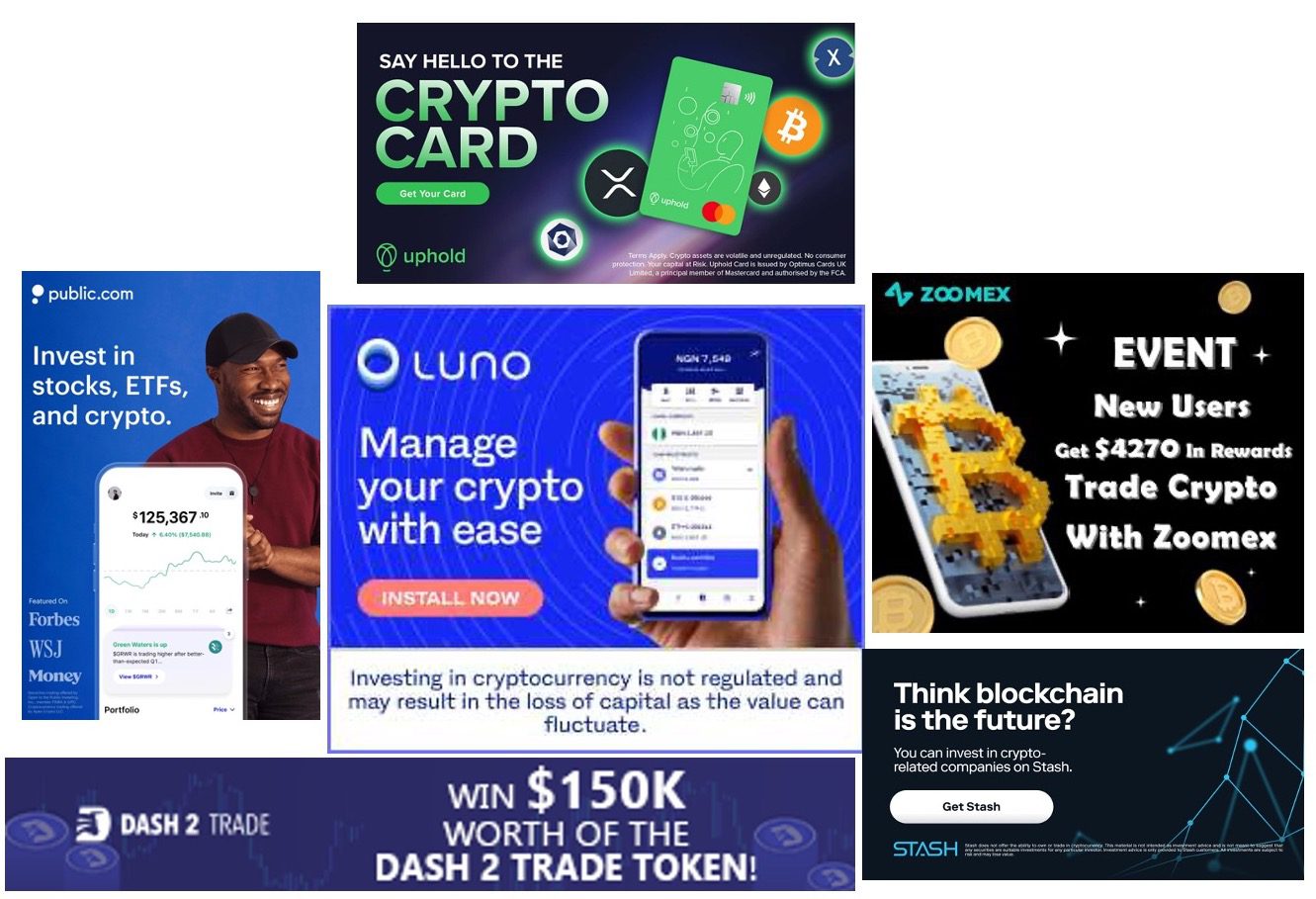

For example, as the 2022 US political season was heating up, it was clear AdTech platforms would need nuanced tools for scrutinizing political ads. So The Media Trust’s Ad Categorization team created four new subcategories for platforms to better analyze ad content: LGBTQ, Immigration, Gun Safety, and Abortion. (Figure 2.) In particular, ads with abortion content spiked right before the August 2022 Kansas abortion referendum.

Platforms weren’t necessarily looking to block all ads in any of these subcategories, just to sort them out easily and see which complied with their content policies and those of downstream partners. Also, by focusing on specific contentious issues, AdTech companies were better able to identify ads with misinformation as well as ones with outright inflammatory content.

An Array of Armaments

Another category where platforms have needed additional specification is weapons. This is a tricky area, and as we saw with 2022 political creative, there’s plenty of contention around gun rights and gun safety.

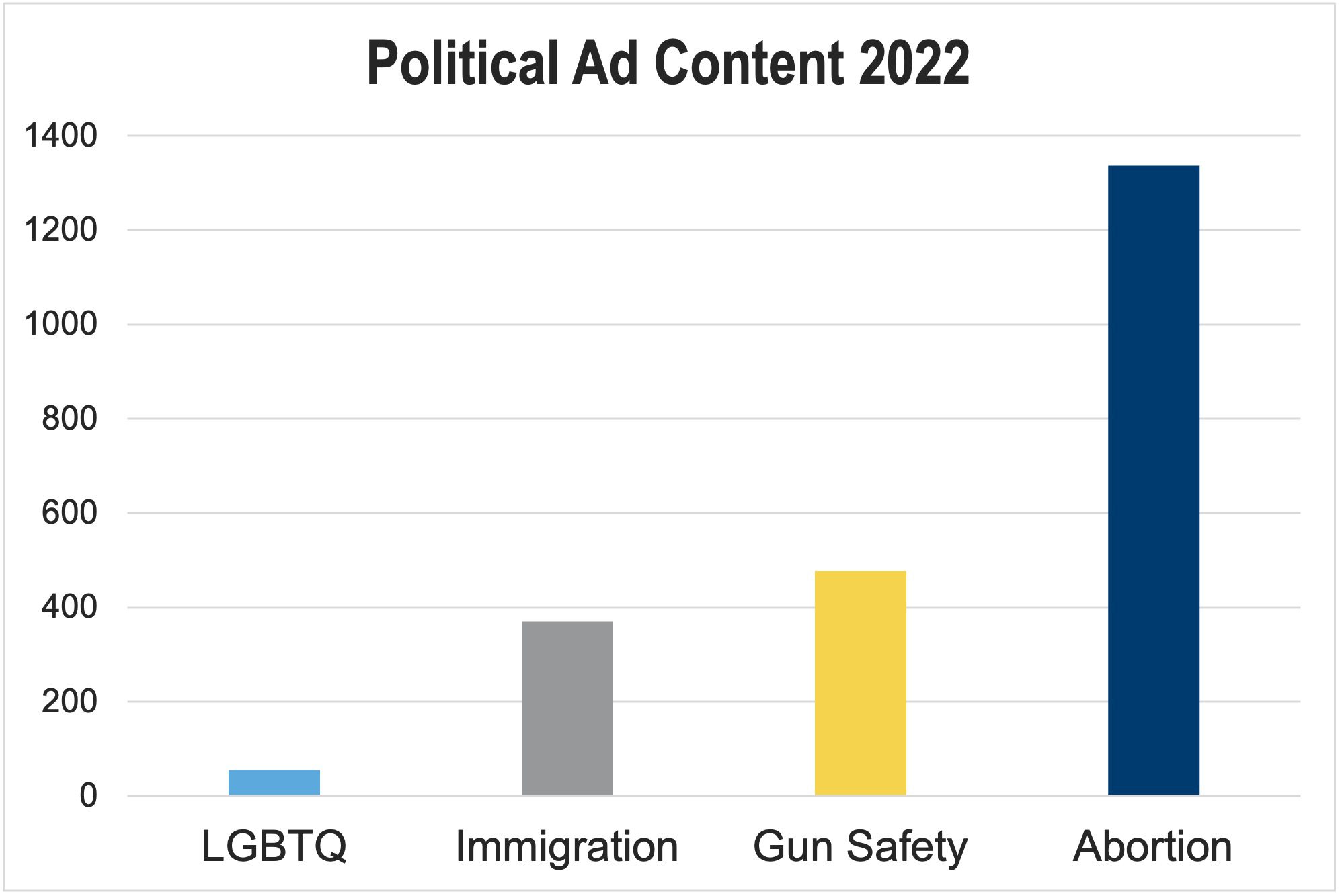

But guns, gun shows, and gun dealers aren’t the only things being advertised out there (Figure 3):

- sport and fishing retailers market bows and arrows;

- daggers and swords are collectors’ items; and

- we’ve even run into ads for military-grade hardware like grenade launchers and surface-to-air missiles (Head of Marketplace Innovation Cory Schnurr likes to joke he was disappointed the call to action on that ad was “Learn More” instead of “Buy Now!”).

There are markets for all these and specific online contexts where advertising makes sense. While an ad for a rifle might seem tasteless on an infant care site, it would certainly be appropriate next to an article with tips for preparing for hunting season.

Identifying actual weapons rather than tools and kitchen implements can also be a challenge—there’s a big difference between a steak knife and a machete. It requires (take a wild guess) nuance! That’s why we’re breaking down our overarching Weapons category into more specific smaller categories:

- Bladed Weapons. Weapons used to cut, hack, or slash such as knives, swords, and daggers. This doesn’t include tools such as axes and hatchets.

- Bows & Arrows. Ads containing weapons that launch arrows or darts, including but not limited to compound bows, longbows, and crossbows.

- Firearms. Guns! Or to be very specific: a portable barreled weapon that expels, is designed to expel or may be converted to expel a shot, bullet or projectile by the action of a propellant.

- Industrial Weapons. Artillery, rockets, missiles, etc.

- Mace/Pepper Spray. Ads promoting or selling sprays irritating to the eyes and respiratory passages, and meant to be used as a disabling weapon. (Don’t want to mistakenly rope in hot sauce!)

- Military Vehicles. Tanks, jets, attack helicopters, submarines, oh my!

- Tasers/Stun Guns. Weapons used to stun or immobilize by electric shock rather than kill or injure.

There are circumstances where advertising for each of these categories makes a lot of sense, and will be well received by consumers. There are also many circumstances in which they will be found tasteless and inappropriate. By making it easy for ad platforms to sort them out and send to the right channel, we’re helping make sure the former is more frequent than the latter.
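To make the idea of sorting ads by subcategory and context concrete, here is a minimal sketch of how a platform might encode it as a lookup. It is purely illustrative: the subcategory names come from the list above, but the page-context labels, the `POLICY` table, and the `route_ad` function are hypothetical and do not represent The Media Trust’s tool or any platform’s actual policy.

```python
from enum import Enum


class WeaponCategory(Enum):
    """The weapon subcategories named in the article."""
    BLADED_WEAPONS = "Bladed Weapons"
    BOWS_AND_ARROWS = "Bows & Arrows"
    FIREARMS = "Firearms"
    INDUSTRIAL_WEAPONS = "Industrial Weapons"
    MACE_PEPPER_SPRAY = "Mace/Pepper Spray"
    MILITARY_VEHICLES = "Military Vehicles"
    TASERS_STUN_GUNS = "Tasers/Stun Guns"


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "review"
    BLOCK = "block"


# Hypothetical policy table: (subcategory, page context) -> decision.
# The context labels are made up for this example.
POLICY = {
    (WeaponCategory.FIREARMS, "hunting"): Decision.ALLOW,
    (WeaponCategory.FIREARMS, "infant_care"): Decision.BLOCK,
    (WeaponCategory.BOWS_AND_ARROWS, "hunting"): Decision.ALLOW,
    (WeaponCategory.INDUSTRIAL_WEAPONS, "infant_care"): Decision.BLOCK,
}


def route_ad(category: WeaponCategory, context: str) -> Decision:
    """Look up the decision; anything without an explicit rule goes to review."""
    return POLICY.get((category, context), Decision.REVIEW)
```

Defaulting to review rather than allow or block is a design choice in this sketch: combinations nobody has written a rule for are held for a person to look at instead of being decided silently.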

Check out Cory Schnurr discussing the importance of nuanced sensitive ad content policies and what categories will become particularly contentious in 2023.

Tools + Policies + People

Twitter’s decision to ban political advertising in 2020 was drastic—and drastic decisions will be the right call on certain categories. Many ad platforms won’t touch marijuana-related advertising because it’s still criminalized under federal law; others avoid crypto because of the lack of regulation and the perceived proliferation of scams. And others find heavily regulated advertising categories like pharmaceuticals not worth the risk.

But with smart tools, any sensitive category of advertising content is manageable. Just like advertising technology, advertising content grows more complicated by the day. We’re constantly iterating our Ad Categorization tool to meet not only market needs but also the requirements of individual clients, which includes building out highly specified custom categories.

At the same time, tools are only as good as the policies that fuel them, and then the humans that execute on those policies. AdTech platforms regarded as high-quality marketplaces develop highly nuanced policies around ads with sensitive content. For example, an image of a bladed weapon may be fine, but someone holding one (particularly in a threatening manner) might be a big no-no.
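The bladed-weapon example can be sketched as a small rule that looks beyond the category to what the creative actually depicts. This is an assumption-heavy illustration: the `CreativeLabels` fields, the `review_bladed_weapon` function, and the decision strings are all hypothetical, and a real policy would be defined by each platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CreativeLabels:
    """Hypothetical labels attached to one ad creative."""
    category: str
    held_by_person: bool = False
    threatening: bool = False


def review_bladed_weapon(labels: CreativeLabels) -> str:
    """Apply an illustrative policy to creatives in the Bladed Weapons category."""
    if labels.category != "Bladed Weapons":
        return "not_applicable"
    if labels.threatening:
        # A threatening depiction is rejected outright in this sketch.
        return "block"
    if labels.held_by_person:
        # Ambiguous case: hand off to a marketplace quality specialist.
        return "human_review"
    # A plain product image passes.
    return "allow"
```

The middle branch is where people come in: the tool surfaces the creative, and a person makes the call.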

Marketplace quality specialists are essential for making tough judgement calls. They’re the key to ensuring consumers are satisfied and downstream partners continue offering supply. It’s not an easy job, and unfortunately they don’t get as much credit as they deserve.

If you want to learn what Ad Categorization can do for your workflows and business, check out this page and feel free to request a demo!